
Slower Flights and New Technologies – A Sustainable Aviation Future

A recent study by the University of Cambridge suggests that the aviation industry could make significant strides in reducing its climate impact by implementing several changes, including flying more slowly. The research recommends reducing flight speeds by approximately 15%, which could cut fuel burn by 5-7% and lower aviation emissions accordingly.

However, the proposal has drawbacks. Slower cruising could add up to 50 minutes to a transatlantic flight, a significant cost given that speed is one of the primary reasons people choose to travel by air.

Aviation’s impact on the climate is significant: it accounts for about 2.5% of global CO2 emissions, and when non-CO2 effects such as contrails are included, its share of the climate impact rises to as much as 4%. This is despite the fact that only 10% of the world’s population travels by air.

In response to these environmental concerns, the Cambridge report proposes a five-year plan to help the aviation industry achieve net-zero climate impact by 2050. The plan includes ambitious measures such as avoiding contrails and slowing down flights. It also recommends new policies for system-wide efficiency gains, reform of Sustainable Aviation Fuel (SAF) policies, and new technology demonstration programs.

The study sets out four primary goals to be achieved by 2030. First, it encourages further research into avoiding contrails, the streaks of condensed water vapor that aircraft leave in the sky, which are believed to have a warming effect on the planet. Second, it calls for closer collaboration between governments and industry. Third, it highlights the need to develop sustainable fuels. Finally, the report advocates testing new technologies.

Titled ‘Five Years to Chart a New Future for Aviation’, the report identifies three efficiency measures: accelerated replacement of aircraft, reducing flight speed, and operating aircraft closer to their design range. Such changes would require significant collaboration among aviation stakeholders, policymakers, and innovators. Investments in research and development, policy incentives, and public awareness campaigns will be key to achieving these goals.

The study serves as a call to action for the aviation industry, highlighting the urgent need for change and the potential benefits of adopting new practices and technologies. If these recommendations are taken on board, they could pave the way for a more sustainable future for aviation.

Science4Data is committed to cutting through greenwashing and measuring real impact. Join the journey to a sustainable future. Your actions matter.

Heterogeneous Multi-Agent Systems

Heterogeneous multi-agent systems (MAS) consist of agents with varying capabilities and functionalities, designed to work together to solve complex problems. These systems leverage the unique strengths of each agent, combining diverse skills such as navigation, object manipulation, and resource management to achieve optimal performance. The heterogeneity in these systems allows for more dynamic and flexible solutions, as agents can be assigned tasks that align with their specific abilities. This diversity is crucial in applications ranging from autonomous vehicles to healthcare systems, where different tasks require specialized expertise. By effectively managing and coordinating these varied agents, heterogeneous MAS can significantly enhance productivity and efficiency in various domains.

Heterogeneous Multi-Agent System Task Assignment Strategies

As artificial intelligence (AI) continues to advance, multi-agent systems (MAS) are becoming increasingly popular for solving complex problems. In a heterogeneous multi-agent system, agents possess diverse capabilities, from navigation and object manipulation to resource handling. Optimizing the efficiency and productivity of such systems requires strategic task assignment, workload balancing, and improved cooperation between agents.

Task Assignment Strategies for Heterogeneous Multi-Agent Systems

Effective task assignment is critical in ensuring that each agent’s unique capabilities are leveraged to their fullest potential. Let’s dive into some of the most popular approaches for assigning tasks within a heterogeneous MAS:

- Centralized Task Assignment: In a centralized task assignment system, a central controller decides which agent performs which task based on each agent’s capabilities and availability. This method ensures a high level of coordination, as tasks are carefully distributed, but it can become a bottleneck in large, complex systems where quick decision-making is essential. Advantages: Strong coordination and task control. Disadvantages: Scalability issues and slower decision-making in large systems.

- Decentralized Task Assignment: In decentralized task assignment, agents autonomously decide which tasks to undertake. They rely on local information and communicate with other agents to optimize their decisions. This approach is highly scalable and less prone to central bottlenecks, but it requires complex decision-making algorithms to ensure tasks are allocated efficiently. Advantages: Scalability and flexibility in task assignment. Disadvantages: May require sophisticated algorithms to avoid inefficiencies.

- Market-Based Task Assignment: A market-based task assignment system introduces competition among agents. Agents bid on tasks according to how well their capabilities and current workload suit each task, and the highest bidder wins the task. This tends to assign each task to the most suitable available agent, promoting efficient resource use. Advantages: Promotes competition and resource optimization. Disadvantages: Complex to implement and may create unequal task distribution.
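The centralized and market-based strategies above can be contrasted in a few lines of Python. This is a minimal sketch under assumed inputs: the agent names, task names, and `suitability` scores (higher means a better fit) are hypothetical. The centralized controller brute-forces the best one-to-one assignment, which is only practical for small teams, and the market is a simple sequential single-item auction in which each free agent bids its suitability score.

```python
from itertools import permutations

# Hypothetical suitability scores: suitability[agent][task], higher is a better fit.
suitability = {
    "rover":   {"navigate": 9, "grasp": 8, "recharge": 1},
    "arm":     {"navigate": 8, "grasp": 2, "recharge": 1},
    "manager": {"navigate": 1, "grasp": 1, "recharge": 5},
}

def centralized_assignment(scores):
    """Central controller: try every one-to-one agent->task mapping, keep the best total.

    Exhaustive search is O(n!), which hints at why a single controller can
    become a bottleneck as the system grows.
    """
    agents = list(scores)
    tasks = list(next(iter(scores.values())))
    best_total, best_plan = float("-inf"), {}
    for perm in permutations(tasks, len(agents)):
        total = sum(scores[a][t] for a, t in zip(agents, perm))
        if total > best_total:
            best_total, best_plan = total, dict(zip(agents, perm))
    return best_plan, best_total

def auction_assignment(scores):
    """Market-based: auction tasks one at a time; each free agent bids its score."""
    free_agents = set(scores)
    plan = {}
    for task in next(iter(scores.values())):
        if not free_agents:
            break
        # Sorting first makes ties deterministic (alphabetically first agent wins).
        winner = max(sorted(free_agents), key=lambda a: scores[a][task])
        plan[winner] = task
        free_agents.remove(winner)
    return plan

plan, total = centralized_assignment(suitability)
auction = auction_assignment(suitability)
print(plan, total)
print(auction, sum(suitability[a][t] for a, t in auction.items()))
```

With these numbers the auction hands `navigate` to `rover` (its single highest bid) and reaches a combined score of 16, while the exhaustive controller reaches 21 by sending `arm` to navigate and `rover` to grasp. Auctions are cheap and easy to distribute, but a simple sequential auction is greedy; a centralized controller for larger teams would typically replace brute force with a polynomial-time method such as the Hungarian algorithm.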

Optimizing Task Assignment with Real-World Examples

- Autonomous Vehicles: Autonomous driving systems use decentralized task assignment to make real-time decisions about navigation, route optimization, and avoiding collisions.

- Robotic Assembly Lines: In manufacturing, market-based task assignment ensures robots handle specialized tasks, like welding, quality checks, or material transport.

Understanding Agent Skill Sets and Capabilities

Optimizing workflows in a heterogeneous MAS requires a deep understanding of agent capabilities. Typically, agents in these systems have specialized skill sets, which include:

- Navigation: Agents adept at navigating complex environments are ideal for tasks that require spatial awareness.

- Object Manipulation: Agents with strong object-handling skills are perfect for tasks requiring precision in assembly, sorting, or manipulation.

- Resource Management: Some agents specialize in managing resources like energy or raw materials, ensuring efficient system operation.

- How to Optimize Agent Capabilities: Use skill-specific task assignment, matching agents to tasks that align with their strengths, and monitor agent performance metrics, tracking each agent’s efficiency in its specialized role and adjusting task assignments as needed.

- Practical Application: In healthcare systems, different agents handle specialized tasks such as patient monitoring, data analysis, and medication delivery, optimizing patient care and system performance.
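Skill-specific assignment and performance monitoring can be combined in a small scoring loop. The sketch below is illustrative only: the `Agent` class, the skill names, and the exponential moving average (EMA) used to track each agent’s observed efficiency are assumptions, not a standard API. Agents lacking the required skill are excluded; among the rest, the agent with the best tracked efficiency gets the task, and each observed result updates that estimate.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class Agent:
    """Hypothetical agent with a fixed skill set and a learned efficiency per skill."""
    name: str
    skills: set[str]
    efficiency: dict[str, float] = field(default_factory=dict)

    def score(self, skill: str) -> float:
        # Skills with no observations yet start from a neutral prior of 0.5.
        return self.efficiency.get(skill, 0.5)

    def record(self, skill: str, observed: float, alpha: float = 0.3) -> None:
        """Blend a new observation (0..1) into the estimate with an EMA."""
        self.efficiency[skill] = (1 - alpha) * self.score(skill) + alpha * observed

def assign(task_skill: str, agents: list[Agent]) -> Optional[Agent]:
    """Return the most efficient agent that has the required skill, or None."""
    capable = [a for a in agents if task_skill in a.skills]
    return max(capable, key=lambda a: a.score(task_skill), default=None)

team = [
    Agent("navigator", {"navigation"}),
    Agent("picker", {"object_manipulation", "navigation"}),
    Agent("planner", {"resource_management"}),
]
team[0].record("navigation", 0.9)  # navigator performed well on a navigation task
team[1].record("navigation", 0.2)  # picker was slow when asked to navigate

print(assign("navigation", team).name)           # best tracked navigator
print(assign("object_manipulation", team).name)  # only agent with this skill
print(assign("welding", team))                   # nobody has this skill
```

The EMA weight `alpha` controls how quickly assignments react to recent performance; a higher value adapts faster but is noisier.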

Methods for Balancing Workloads in Multi-Agent Systems

Workload balancing is critical to preventing bottlenecks and ensuring all agents are utilized efficiently. When some agents are overloaded while others remain idle, system performance suffers. Below are methods that can help balance workloads across agents:

- Load Balancing Algorithms: Load balancing algorithms dynamically allocate tasks to ensure that no single agent is overwhelmed. By continuously monitoring task progress and redistributing tasks as needed, these algorithms help maintain system efficiency. Advantage: Reduces agent idle time and prevents overload. Example: In an e-commerce fulfillment center, load balancing ensures that no single robot is overwhelmed with package deliveries while others remain underutilized.

- Priority Queuing: Priority queuing assigns tasks based on their urgency. Critical tasks are processed first, ensuring that essential functions are carried out without delay. Advantage: Ensures that urgent tasks are prioritized and completed first. Example: In autonomous vehicle fleets, critical safety maneuvers are given top priority, while non-urgent tasks like route optimization are queued for later.

- Adaptive Scheduling: Adaptive scheduling continuously monitors agent workloads and makes real-time adjustments to task assignments. This dynamic process ensures that tasks are reassigned as agent workloads change, leading to better system performance. Advantage: Real-time adaptability prevents performance dips and ensures efficient resource use. Example: In large-scale AI applications like smart cities, adaptive scheduling can reroute agents in real time to avoid congested areas or overloaded resources.
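Priority queuing and load balancing compose naturally: pop tasks in priority order and hand each one to the currently least-loaded agent. The minimal sketch below uses Python’s `heapq` and assumes hypothetical tasks described by a numeric priority (lower number means more urgent), a name, and a cost in arbitrary work units. Adaptive scheduling could be approximated by re-running this loop whenever loads or priorities change.

```python
import heapq

def schedule(tasks, agents):
    """Dispatch tasks in priority order to whichever agent has the smallest load.

    tasks:  list of (priority, name, cost); a lower priority number is more urgent.
    agents: list of agent names.
    Returns the dispatch order as (task, agent) pairs and the final load per agent.
    """
    task_queue = list(tasks)
    heapq.heapify(task_queue)                   # priority queue of pending tasks
    load_heap = [(0, name) for name in agents]  # min-heap keyed on current load
    heapq.heapify(load_heap)
    dispatched = []
    while task_queue:
        _priority, task, cost = heapq.heappop(task_queue)
        load, agent = heapq.heappop(load_heap)  # least-loaded agent right now
        dispatched.append((task, agent))
        heapq.heappush(load_heap, (load + cost, agent))
    loads = {agent: load for load, agent in load_heap}
    return dispatched, loads

tasks = [
    (2, "reroute", 3),
    (0, "emergency_brake", 1),
    (1, "deliver_parcel", 4),
    (2, "optimize_route", 2),
]
order, loads = schedule(tasks, ["bot_a", "bot_b"])
print(order)
print(loads)
```

The safety-critical `emergency_brake` task is always dispatched first, and each later task goes to whichever bot has accumulated the least work so far.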

Improving Cooperation Between Specialized Agents

Cooperation between agents with diverse capabilities is essential for achieving complex goals in a heterogeneous MAS. Strong collaboration allows agents to leverage each other’s strengths, working together to accomplish tasks more efficiently.

- Communication Protocols: Establishing clear and effective communication protocols enables agents to share data, status updates, and task progress. Good communication helps agents coordinate their actions and avoid conflicts. Benefit: Improved coordination and resource sharing. Example: In drone swarms, communication protocols allow drones to share information about obstacles, ensuring smooth navigation and task completion.

- Collaboration Frameworks: Collaboration frameworks define the roles and responsibilities of each agent, ensuring that all agents understand their part in completing the overall system objectives. By formalizing interaction patterns, agents can work together more effectively. Benefit: Streamlined interactions and role clarity. Example: In healthcare robotics, collaboration frameworks assign specific roles, such as surgery assistance or patient monitoring, ensuring efficient task distribution.

- Learning Mechanisms: Implementing learning mechanisms enables agents to learn from past experiences and improve their cooperative behaviors over time. By receiving feedback and adjusting their strategies, agents can enhance their future performance. Benefit: Continual improvement in agent collaboration. Example: In autonomous vehicle fleets, vehicles learn from previous traffic patterns and accidents to improve cooperation in avoiding collisions and traffic congestion.
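A communication protocol can be as simple as an agreed message format plus a broadcast channel. The sketch below is a hypothetical in-process publish/subscribe bus (a real drone swarm would run over network middleware, which is not shown): each drone publishes `obstacle` messages, and every subscriber merges them into its own obstacle map, so all drones learn about hazards that only one of them has detected.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class Message:
    """Hypothetical message format shared by all agents."""
    sender: str
    topic: str
    payload: tuple

class Bus:
    """Minimal in-process publish/subscribe channel."""
    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, message):
        # Deliver the message to every callback registered for its topic.
        for callback in self._subscribers[message.topic]:
            callback(message)

class Drone:
    def __init__(self, name, bus):
        self.name = name
        self.bus = bus
        self.obstacles = set()  # this drone's picture of known hazards
        bus.subscribe("obstacle", self.on_obstacle)

    def on_obstacle(self, message):
        self.obstacles.add(message.payload)

    def sense(self, position):
        """Report a locally detected obstacle to the whole swarm."""
        self.bus.publish(Message(self.name, "obstacle", position))

bus = Bus()
swarm = [Drone(f"drone_{i}", bus) for i in range(3)]
swarm[0].sense((10, 4))
swarm[2].sense((3, 7))
print([sorted(d.obstacles) for d in swarm])
```

Because the message format is fixed and shared, new kinds of agents can join the swarm simply by subscribing to the topics they care about.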

Optimizing workflows in heterogeneous multi-agent systems requires a thoughtful combination of task assignment strategies, workload balancing, and improving cooperation between agents. By understanding the unique capabilities of each agent, leveraging appropriate task assignment methods, and fostering better cooperation, systems can achieve higher efficiency and productivity.

Whether you’re developing AI-driven robotics or improving healthcare systems, these strategies provide a solid foundation for creating effective and optimized multi-agent systems that can tackle the challenges of today’s world.

Climate Change Poster Collection of the Week – Methanogenesis

This week’s Climate Change Poster Collection highlights Methanogenesis, the biological process by which certain microorganisms, known as methanogens, produce methane as a metabolic byproduct in anaerobic conditions. This process is a key component of the global carbon cycle and has profound implications for climate change. Methanogens are a type of archaea, a distinct domain of life separate from bacteria and eukaryotes, and they thrive in environments devoid of oxygen, such as wetlands, rice paddies, landfills, and the digestive tracts of ruminants. Their activity is particularly notable in permafrost regions, where vast amounts of organic material have been locked away for millennia. As global temperatures rise due to climate change, permafrost is beginning to thaw, exposing this organic material to microbial decomposition. When methanogens break down this organic matter, they produce methane—a greenhouse gas that is approximately 25 times more effective at trapping heat in the atmosphere than carbon dioxide over a 100-year period.
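For reference, the two best-known methanogenic pathways, and the CO2-equivalent conversion implied by the 100-year warming factor quoted above, can be written as follows. The one-tonne mass is purely illustrative.

```latex
\begin{aligned}
\text{Hydrogenotrophic:}\quad & \mathrm{CO_2} + 4\,\mathrm{H_2} \rightarrow \mathrm{CH_4} + 2\,\mathrm{H_2O} \\
\text{Acetoclastic:}\quad & \mathrm{CH_3COOH} \rightarrow \mathrm{CH_4} + \mathrm{CO_2} \\
\text{CO}_2\text{-equivalent:}\quad & m_{\mathrm{CO_2e}} = \mathrm{GWP}_{100} \times m_{\mathrm{CH_4}} \approx 25 \times 1\,\mathrm{t} = 25\,\mathrm{t}\ \mathrm{CO_2e}
\end{aligned}
```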

The implications of methanogenesis for climate change are multifaceted and deeply concerning. The release of methane from thawing permafrost creates a feedback loop: as more permafrost thaws, more methane is released, further accelerating global warming and leading to even more permafrost thaw. This positive feedback mechanism is a significant concern for climate scientists because it has the potential to rapidly amplify the effects of climate change. Compared with carbon dioxide, which remains in the atmosphere for centuries even though oceans and forests absorb part of it, methane persists for only about a decade but has a much more potent warming effect during that time. This makes the regulation of methane emissions a critical component of climate mitigation strategies.

Moreover, methanogenesis in wetlands and rice paddies also contributes to atmospheric methane levels. Wetlands are the largest natural source of methane, and they play a dual role in the carbon cycle. On one hand, they act as carbon sinks, sequestering carbon dioxide through plant growth. On the other hand, the anaerobic conditions in waterlogged soils are ideal for methanogens, making wetlands significant methane emitters. Similarly, rice paddies, which are essentially artificial wetlands, are a major source of methane due to the anaerobic decomposition of organic material in flooded fields. Human activities such as agriculture and waste management further exacerbate this issue. Livestock farming, particularly ruminants like cows and sheep, contributes significantly to methane emissions through enteric fermentation—a digestive process in which methanogens in the stomachs of these animals break down food and produce methane as a byproduct.

Understanding methanogenesis is therefore crucial for developing accurate climate models and effective mitigation strategies. Research into this process can help identify ways to manage methane emissions, such as through changes in agricultural practices, waste management improvements, and even potential interventions in permafrost regions. For instance, altering livestock diets to reduce enteric fermentation, improving manure management practices, and adopting rice cultivation techniques that minimize waterlogged conditions can all contribute to reducing methane emissions. In landfill management, capturing methane for use as a renewable energy source can both reduce greenhouse gas emissions and provide a sustainable energy solution.

Furthermore, studying the microbial ecology of methanogens can lead to innovative approaches to mitigate methane emissions. For example, understanding the environmental conditions that favor methanogenesis can inform strategies to disrupt these conditions and reduce methane production. Biotechnological advances could also enable the development of microbial treatments that inhibit methanogens or promote the activity of microbes that consume methane, known as methanotrophs.

The role of methanogenesis in climate change underscores the importance of addressing the interconnectedness of natural processes and human activities. By shedding light on the intricate connections between microbial processes and climate dynamics, we can better address the multifaceted challenges posed by global warming and work towards a more sustainable future. This holistic understanding is essential for crafting policies and practices that not only mitigate climate change but also promote resilience and adaptation in the face of its inevitable impacts. In conclusion, methanogenesis is a critical but often overlooked aspect of the climate change puzzle, and addressing it requires a concerted effort that spans scientific research, technological innovation, and policy development.

Discover an inspiring collection of climate change posters.

Custom LLM Training for Tailored AI Solutions

Introduction to Custom LLM Training

Definition and Overview

Custom LLM Training involves adapting large language models to specialized datasets unique to an organization. By doing so, the models can better understand and generate content relevant to specific business needs. This process refines pre-trained models on new, domain-specific data, making them more proficient at understanding the context and language nuances of that domain.
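To make "refining a pre-trained model" concrete, the sketch below uses a deliberately tiny stand-in: a one-feature logistic-regression classifier rather than an LLM. Its weights are first fit on a hypothetical "general" dataset, then training simply continues from those weights on a hypothetical "domain" dataset whose decision boundary sits elsewhere. LLM fine-tuning applies the same core idea (continuing gradient descent from pre-trained parameters) at vastly larger scale; the data, learning rate, and epoch counts here are illustrative assumptions.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def log_loss(w, b, data):
    """Average binary cross-entropy of the model p = sigmoid(w * x + b)."""
    total = 0.0
    for x, y in data:
        p = min(max(sigmoid(w * x + b), 1e-12), 1 - 1e-12)  # clip to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)

def gradient_descent(w, b, data, lr=0.5, epochs=300):
    """Full-batch gradient descent on log loss, starting from the given (w, b)."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum((sigmoid(w * x + b) - y) * x for x, y in data) / n
        grad_b = sum(sigmoid(w * x + b) - y for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

def accuracy(w, b, data):
    return sum((sigmoid(w * x + b) >= 0.5) == (y == 1) for x, y in data) / len(data)

# Hypothetical data: "general" labels flip at x = 0, "domain" labels flip near x = 2.
general = [(-3, 0), (-2, 0), (-1, 0), (1, 1), (2, 1), (3, 1)]
domain = [(0.5, 0), (1.0, 0), (1.5, 0), (2.5, 1), (3.0, 1), (3.5, 1)]

w0, b0 = gradient_descent(0.0, 0.0, general)  # "pre-training" from scratch
w1, b1 = gradient_descent(w0, b0, domain)     # "fine-tuning" starts from (w0, b0)

print("domain accuracy before fine-tuning:", accuracy(w0, b0, domain))
print("domain accuracy after fine-tuning: ", accuracy(w1, b1, domain))
```

The pre-trained model labels every domain example as positive, because its boundary sits at zero; continuing training on the domain data moves the boundary toward the domain’s own threshold.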

Importance in Modern Organizations

In the era of information overload, having models tailored to specific domains aids in filtering and retrieving relevant information efficiently. This customization can produce more accurate results, boosting productivity and decision-making. Organizations can leverage custom LLMs to automate numerous tasks, from customer service chatbots to complex data analysis, thereby freeing up human resources for more strategic functions.

Benefits of Custom LLM Training

- Enhanced Relevance

Custom LLMs are fine-tuned to understand the nuances and specific jargon of a particular field, leading to more relevant and precise outputs. This relevance is crucial for applications where generic models might miss subtle yet significant details, such as in legal document analysis or medical diagnostics.

- Improved Accuracy in Information Retrieval

Training models on domain-specific datasets can significantly improve the accuracy of information retrieval, because the model is more closely aligned with the context and requirements of the domain. For instance, a custom LLM trained on financial data can more accurately interpret and generate financial reports, forecasts, and analyses.

- Cost and Time Efficiency

Custom LLMs reduce the time and cost associated with manual data processing by automating and optimizing the retrieval and generation of relevant information. This efficiency translates to faster decision-making and reduced operational costs, as seen in industries like customer service, where chatbots can handle a large volume of inquiries with minimal human intervention.

- Competitive Advantage

Organizations that leverage custom LLMs can gain a competitive edge by accessing and utilizing information more effectively than their competitors. This advantage is particularly evident in sectors like e-commerce, where personalized customer interactions can lead to higher satisfaction and loyalty.

Steps to Implement Custom LLM Training

- Data Collection and Preparation

Gather and preprocess the specific datasets that the model will be trained on. This includes cleaning the data and ensuring it is representative of the domain. High-quality data is critical as it directly impacts the model’s performance. Techniques such as data augmentation and balancing can be employed to enhance the dataset’s robustness.

- Model Selection

Choose an appropriate pre-trained LLM as the base model. Popular choices include transformer-based models such as GPT-3, a generative model, and BERT, an encoder model better suited to understanding tasks such as classification and retrieval. The selection depends on the specific requirements of the task, such as whether it calls for natural language understanding or generation.

- Training and Fine-Tuning

Fine-tune the selected model using the prepared dataset. This involves adjusting the model’s parameters to better fit the specific data. Fine-tuning can be computationally intensive, requiring powerful hardware or cloud-based solutions to handle the large-scale data processing.

- Evaluation and Validation

Evaluate the model’s performance using a separate validation dataset to ensure it meets the required accuracy and relevance standards. Metrics such as precision, recall, F1-score, and others are used to assess the model’s effectiveness. Iterative testing and refinement are often necessary to achieve optimal results.

- Deployment and Monitoring

Deploy the model into the production environment and continuously monitor its performance to ensure it remains effective over time. This involves setting up monitoring systems to track the model’s outputs and incorporating feedback loops to update the model as needed. Regular retraining with new data ensures that the model stays relevant and accurate.
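The evaluation and monitoring steps can be sketched in plain Python. This assumes a hypothetical binary task (for example, "is this document relevant?") where predictions and gold labels are 0/1; the rolling-window monitor and its 0.8 alert threshold are illustrative assumptions rather than a prescribed method.

```python
from collections import deque

def precision_recall_f1(y_true, y_pred):
    """Compute precision, recall and F1 for the positive class (label 1)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

class RollingAccuracyMonitor:
    """Tracks accuracy over the most recent `window` predictions that received feedback."""
    def __init__(self, window=100, threshold=0.8):
        self.results = deque(maxlen=window)  # old results fall off automatically
        self.threshold = threshold

    def record(self, prediction, actual):
        self.results.append(prediction == actual)

    def accuracy(self):
        return sum(self.results) / len(self.results) if self.results else None

    def needs_retraining(self):
        acc = self.accuracy()
        return acc is not None and acc < self.threshold

# Offline validation on a held-out set.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]
print(precision_recall_f1(y_true, y_pred))

# Production monitoring with user or reviewer feedback.
monitor = RollingAccuracyMonitor(window=4, threshold=0.8)
for pred, actual in [(1, 1), (0, 0), (1, 0), (0, 1), (1, 1)]:
    monitor.record(pred, actual)
print(monitor.accuracy(), monitor.needs_retraining())
```

A sliding window reacts to recent drift rather than averaging over the model’s whole lifetime; the window size trades responsiveness against noise.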

Case Studies of Custom LLM Training

- Successful Implementations in Industry

Various industries, such as healthcare, finance, and legal, have successfully implemented custom LLM training to enhance their information retrieval systems. For example, in healthcare, custom LLMs have been used to analyze patient records, leading to improved diagnostic accuracy and personalized treatment plans.

- Lessons Learned

Key lessons from these implementations include the importance of high-quality data and the need for continuous monitoring and updates to the model. Organizations have discovered that investing in robust data pipelines and infrastructure is crucial for the long-term success of custom LLM projects.

- Impact on Business Outcomes

Organizations have reported significant improvements in efficiency, accuracy, and overall business outcomes as a result of custom LLM training. For instance, a legal firm using a custom LLM for document review significantly reduced the time and cost associated with due diligence processes, enabling faster transaction closures and higher client satisfaction.

Challenges and Solutions in Custom LLM Training

- Data Quality and Quantity

Challenge: Ensuring the dataset is comprehensive and of high quality.

Solution: Implement rigorous data preprocessing and validation steps. Utilize techniques such as data augmentation to enhance the dataset and ensure it covers a wide range of scenarios.

- Computational Resources

Challenge: Training large models requires substantial computational power.

Solution: Utilize cloud services and distributed computing to manage resource demands. Many cloud providers offer scalable solutions that can handle the intensive computational requirements of LLM training.

- Ethical and Legal Considerations

Challenge: Addressing privacy concerns and adhering to legal regulations.

Solution: Implement robust data governance policies and ensure compliance with relevant laws. Transparency in data usage and incorporating ethical AI principles are essential to maintain trust and integrity.

- Strategies to Overcome Challenges

Adopt a phased approach, starting with pilot projects to identify and mitigate potential issues before full-scale implementation. Engage cross-functional teams to address technical, ethical, and operational challenges comprehensively.
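Several of the solutions above (rigorous preprocessing, validation, and privacy-aware data governance) can begin with a small cleaning pass over raw training records. The sketch below is a hypothetical example, not a complete pipeline: it normalizes whitespace, drops very short records, removes case-insensitive duplicates, and masks e-mail addresses and phone-like numbers with simple regular expressions. These patterns are crude assumptions that will miss many real forms of personal data and will also over-match some numbers, such as ISO dates.

```python
import re

# Deliberately simple, illustrative patterns; production PII detection needs far more.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def clean_records(records, min_words=3):
    """Normalize, filter, redact and de-duplicate a list of raw text records."""
    seen = set()
    cleaned = []
    for text in records:
        text = " ".join(text.split())        # collapse runs of whitespace
        if len(text.split()) < min_words:    # too short to be useful training data
            continue
        text = EMAIL.sub("[EMAIL]", text)
        text = PHONE.sub("[PHONE]", text)
        key = text.lower()                   # de-duplicate after redaction, ignoring case
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned

raw = [
    "Contact  jane.doe@example.com   about the merger agreement.",
    "contact jane.doe@example.com about the merger agreement.",
    "OK",
    "Call +1 555 123 4567 to confirm the closing date.",
]
for line in clean_records(raw):
    print(line)
```

Running redaction before de-duplication means records that differ only in personal details collapse into one, which is usually desirable for training data.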

Custom LLM Training offers significant benefits, including enhanced relevance, improved accuracy, and efficiency in information retrieval. Although challenges exist, they can be effectively managed with the right strategies, leading to substantial improvements in organizational performance. By investing in custom LLMs, organizations can harness the power of tailored AI to drive innovation and maintain a competitive edge in their respective industries.

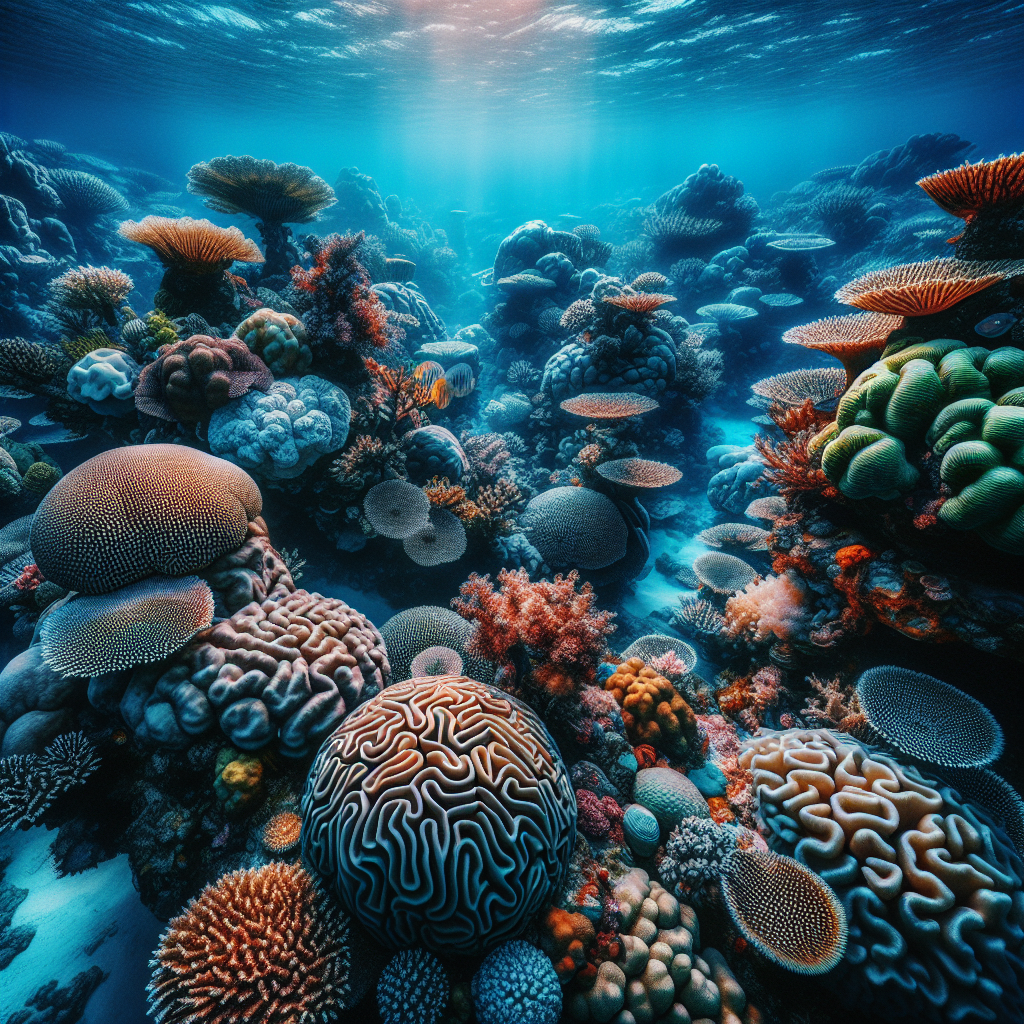

Hawaiian Coral’s Adaptability to Climate Change

Scientists at the UH Hawaiʻi Institute of Marine Biology have made a significant breakthrough in understanding the adaptability of eight common species of Hawaiian coral to ocean warming and acidification. The study revealed that these coral species could survive under “low climate change scenarios,” which involve a global reduction in carbon dioxide emissions.

This research emphasizes the need for climate change mitigation to ensure the survival of coral reefs. The study found that none of the studied species are likely to adapt quickly enough to a high rate of climate change. The key to their survival lies in the reduction of carbon dioxide emissions on a global scale.

Interestingly, the study discovered that a significant portion of the corals’ ability to withstand acidic and warmer conditions is inherited. This suggests that the ability to survive future ocean conditions can be passed down to future generations, offering a glimmer of hope for the long-term survival of these precious marine organisms.

Contrary to most predictions of coral reef collapse due to climate change, this study offers hope for their preservation through evidence of coral adaptability. The Hawaiʻi Institute of Marine Biology (HIMB) found that eight common species of coral found in Hawaiʻi and throughout the Indo-Pacific region can survive under conditions that reflect a global reduction in carbon dioxide emissions.

The study reiterates the importance of reducing carbon dioxide emissions for the survival of coral reefs. None of the species studied could withstand a “business-as-usual” carbon dioxide emissions scenario. This emphasizes that our current approach to carbon dioxide emissions is simply not sustainable if we are to ensure the survival of these vital marine ecosystems.

The study’s unexpected findings suggest that there is still a chance to preserve coral reefs, despite widespread predictions that corals cannot adapt quickly enough to climate change. The findings from the UH Hawaiʻi Institute of Marine Biology provide a hopeful perspective on the potential resilience of coral species, highlighting the importance of continued research and action in mitigating climate change.


The Power of Generative AI for Content Moderation

The rapid growth of the internet and digital communication has brought many benefits, but it has also introduced significant challenges, particularly in keeping online environments safe. Multi-Agent AI, which uses multiple generative agents, offers a promising solution by analyzing and flagging inappropriate or harmful content in real time.

Limitations of Traditional Content Moderation

Traditional content moderation methods often rely heavily on human moderators and simple algorithms. These approaches can be slow, inconsistent, and prone to human error. Human moderators can experience burnout due to the constant exposure to harmful content, while basic algorithms may miss nuanced or emerging threats. This makes it difficult to maintain a safe and welcoming online environment at scale.

Human moderators, despite their best efforts, are often overwhelmed by the sheer volume of content that needs to be reviewed. This can lead to delays in identifying and removing harmful content, allowing it to spread and potentially cause harm before action is taken. Furthermore, the emotional toll on human moderators who are constantly exposed to graphic, violent, or otherwise disturbing material cannot be overstated. This can lead to high turnover rates and a constant need for training new moderators, which is both time-consuming and costly.

Simple algorithms, on the other hand, lack the sophistication needed to understand context. For instance, an algorithm might flag a post as harmful because it contains certain keywords, even when those words are used in a non-offensive or educational context. Conversely, more subtle forms of harmful content, such as coded language or emerging threats that have not yet been identified, can slip through the cracks. This inconsistency can undermine the credibility of the moderation process and the overall safety of the platform.
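Both failure modes of simple algorithms are easy to reproduce with a naive keyword filter. In this hypothetical sketch, the one-word blocklist and the example posts are invented placeholders: the filter flags an educational post because it contains a listed word, yet misses an obfuscated spelling of the same word.

```python
import re

BLOCKLIST = {"scam"}  # hypothetical blocked term

def keyword_flag(post: str) -> bool:
    """Flag a post if any whole word matches the blocklist (no context awareness)."""
    words = re.findall(r"[a-z0-9]+", post.lower())
    return any(word in BLOCKLIST for word in words)

educational = "How to recognize a scam: never share your bank PIN."
coded = "Great s-c-a-m opportunity, DM me for details."

print(keyword_flag(educational))  # flagged although the post is protective advice
print(keyword_flag(coded))        # missed because the word is split by hyphens
```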

What is Multi-Agent AI?

Multi-Agent AI involves multiple autonomous agents working together to achieve complex tasks. Compared with single-agent systems, this approach can be more robust and scalable, making it well suited to dynamic environments such as social media platforms. Each agent operates semi-independently but collaborates with the others to improve efficiency and accuracy.

By breaking down complex problems into smaller tasks, Multi-Agent AI systems can handle various challenges more effectively. For example, one agent may specialize in sentiment analysis, another in image recognition, and yet another in spam detection. By sharing their findings, these agents perform a comprehensive analysis, improving the system’s resilience and adaptability to new content and threats.

The collaborative nature of Multi-Agent AI allows for a more nuanced and thorough approach to content moderation. Each agent can leverage its specialized expertise to identify specific types of harmful content, and then share this information with the other agents. This creates a network of knowledge that can be used to improve the overall accuracy and effectiveness of the system. Additionally, the semi-independent operation of each agent allows the system to continue functioning even if one agent encounters an issue, ensuring continuous and reliable moderation.
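A minimal sketch of specialized agents sharing their findings, assuming each agent is simply a function that returns a risk score between 0 and 1 (or `None` when it has nothing to analyze). The agent names, scoring rules, and 0.7 threshold are hypothetical placeholders for real sentiment, spam, and image models. The coordinator combines whatever scores are available and keeps working when one agent raises an error, mirroring the fault tolerance described above.

```python
def sentiment_agent(post):
    hostile = {"hate", "destroy", "idiot"}  # toy lexicon, not a real model
    words = post["text"].lower().split()
    return min(1.0, sum(w.strip(".,!?") in hostile for w in words) / 2)

def spam_agent(post):
    return 0.9 if post["text"].count("http") >= 3 else 0.1

def image_agent(post):
    if post.get("image") is None:
        return None                                   # nothing to analyze
    raise RuntimeError("vision model unavailable")    # simulated outage

AGENTS = {"sentiment": sentiment_agent, "spam": spam_agent, "image": image_agent}

def moderate(post, threshold=0.7):
    """Collect each agent's score, skip failed or inapplicable agents, flag on the max."""
    scores, failures = {}, []
    for name, agent in AGENTS.items():
        try:
            score = agent(post)
        except Exception:
            failures.append(name)  # degrade gracefully instead of crashing
            continue
        if score is not None:
            scores[name] = score
    risk = max(scores.values(), default=0.0)
    return {"flagged": risk >= threshold, "risk": risk, "scores": scores, "failed": failures}

print(moderate({"text": "You idiot, I will destroy you!", "image": "upload.jpg"}))
print(moderate({"text": "Lovely sunset today."}))
```

Taking the maximum score is a conservative choice: any single specialist can trigger a flag. A weighted average would produce fewer flags but could dilute a strong signal from one agent.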

The Role of Generative AI in Content Moderation

Generative AI agents are a special type of AI that can create new data based on patterns they’ve learned. In content moderation, these agents can generate synthetic examples of harmful content to augment the training data for detection models. They can also simulate user behavior to predict and identify risks. This helps the system recognize and respond to various forms of harmful content, from hate speech to misinformation.

For instance, generative AI agents can use techniques like Generative Adversarial Networks (GANs) to create realistic examples of offensive content. By exposing detection models to a wide range of harmful material, these agents improve the system’s ability to detect subtle and emerging threats. This proactive approach enables the system to not only identify known harmful content but also adapt to new forms as they arise.

Generative AI can also be used to create a diverse range of training data that includes various forms of harmful content that may not be present in existing datasets. This can help address the issue of bias in AI algorithms by ensuring that the system is trained on a more representative sample of content. Additionally, generative AI can simulate the behavior of malicious users, allowing the system to anticipate and counteract new tactics that may be used to spread harmful content.
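A true generative model such as a GAN or a language model is beyond a short example, so the sketch below uses a simple rule-based stand-in to illustrate the underlying idea of synthetic data augmentation. Starting from a seed phrase, it produces obfuscated variants (look-alike character substitutions and inserted separators) of the kind used to evade filters, which could then be added to a detector’s training set. The substitution table and seed phrase are hypothetical.

```python
from itertools import product

# Hypothetical look-alike substitutions often used to dodge keyword filters.
SUBSTITUTIONS = {"a": ["a", "@", "4"], "e": ["e", "3"], "o": ["o", "0"], "s": ["s", "$"]}

def obfuscated_variants(phrase, separators=("", "-", ".")):
    """Generate synthetic spellings of `phrase` for augmenting training data.

    The number of variants grows multiplicatively with phrase length,
    so this is only suitable for short seeds.
    """
    options = [SUBSTITUTIONS.get(ch, [ch]) for ch in phrase.lower()]
    variants = set()
    for chars in product(*options):
        for sep in separators:
            variants.add(sep.join(chars))
    return sorted(variants)

variants = obfuscated_variants("scam")
print(len(variants))
print(variants[:5])
```

Each generated spelling can be labeled with the seed’s class, giving a detector examples of obfuscations that may be rare in the original dataset.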

Real-Time Analysis and Flagging

Real-time analysis is essential for effective content moderation. Multi-Agent AI systems use techniques such as natural language processing (NLP) and computer vision to examine content as it is uploaded. These systems can flag questionable material in milliseconds, enabling human moderators to review and take action quickly. This real-time capability is crucial for platforms with high user activity, where harmful content can spread rapidly.

Through continuous monitoring of user-generated content, NLP allows the system to interpret text and identify harmful language, while computer vision scans images and videos for inappropriate material. Once flagged, the content is prioritized for human moderation, ensuring swift responses. This capability is especially important for live-streaming platforms and social media platforms, where harmful content can have an immediate and widespread impact.

The ability to analyze and flag content in real-time is particularly important for preventing the spread of harmful content during live events. For example, during a live-streamed event, harmful content can be broadcast to thousands or even millions of viewers in a matter of seconds. Real-time analysis allows the system to detect and flag this content immediately, preventing it from reaching a wider audience and potentially causing harm. This is especially critical for platforms that cater to younger audiences, who may be more vulnerable to the effects of harmful content.
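Prioritizing flagged content for human review is essentially a priority queue. In the hypothetical sketch below, each flagged item is ranked by its model risk score multiplied by its current audience size, with live streams given an extra boost; the weighting formula and the boost factor of 2 are illustrative assumptions that a real platform would tune.

```python
import heapq
import itertools

class ReviewQueue:
    """Max-priority queue of flagged items awaiting human moderators."""
    def __init__(self, live_boost=2.0):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker keeps insertion order stable
        self.live_boost = live_boost

    def push(self, item_id, risk, viewers, live=False):
        priority = risk * viewers * (self.live_boost if live else 1.0)
        # heapq is a min-heap, so store the negated priority.
        heapq.heappush(self._heap, (-priority, next(self._counter), item_id))

    def pop(self):
        _neg_priority, _order, item_id = heapq.heappop(self._heap)
        return item_id

    def __len__(self):
        return len(self._heap)

queue = ReviewQueue()
queue.push("comment_17", risk=0.95, viewers=40)
queue.push("stream_3", risk=0.6, viewers=5000, live=True)
queue.push("post_88", risk=0.8, viewers=1200)
order = [queue.pop() for _ in range(len(queue))]
print(order)
```

Weighting by reach means a moderately risky live stream with a large audience is reviewed before a very risky comment that few people have seen.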

Creating Safer Online Spaces

The main goal of using Multi-Agent AI in content moderation is to create safer online spaces. By efficiently detecting and mitigating harmful content, these systems protect users from exposure to offensive material. This proactive approach not only enhances the user experience but also helps ensure compliance with the platform's community guidelines, fostering trust in digital platforms.

Creating safer online spaces is not just about removing harmful content, but also about fostering a positive and inclusive environment for all users. Multi-Agent AI can help achieve this by identifying and promoting positive content, as well as flagging and removing harmful material. For example, the system can prioritize content that promotes healthy discussions and respectful interactions, while filtering out content that is likely to cause harm or offense. This can help create a more welcoming and supportive community, where users feel safe and valued.
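One way to combine filtering with promotion is to drop posts above a harm cutoff and rank the rest by a score that rewards civility and penalizes residual risk. This is a minimal sketch that assumes two hypothetical model outputs per post, `harm_score` and `civility_score`, each in [0, 1]. The cutoff and weight are arbitrary illustrative defaults, not recommended values.

```python
from typing import List, NamedTuple


class Post(NamedTuple):
    post_id: str
    harm_score: float      # hypothetical harm-classifier output, 0..1
    civility_score: float  # hypothetical "healthy discussion" model output, 0..1


def rank_feed(posts: List[Post], harm_cutoff: float = 0.8, harm_weight: float = 1.0) -> List[str]:
    """Remove likely-harmful posts, then order the rest so civil, low-risk posts come first."""
    kept = [p for p in posts if p.harm_score < harm_cutoff]
    # Higher civility lifts a post; remaining harm risk pushes it down.
    kept.sort(key=lambda p: p.civility_score - harm_weight * p.harm_score, reverse=True)
    return [p.post_id for p in kept]
```

Raising `harm_weight` makes borderline posts sink further without removing them, which is one way to avoid outright deletion of content that is merely uncertain.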

Mitigating Biases and Fairness Issues

Despite its advantages, Multi-Agent AI faces challenges. Ethical concerns, such as bias in AI algorithms and the risk of over-censorship, remain significant issues. Future development will need to focus on improving the transparency and accountability of these systems, as well as exploring advanced technologies like blockchain for better traceability. Continuous research and innovation are critical to addressing these challenges and adapting to the ever-changing landscape of online communication.

One of the key challenges is preventing AI algorithms from perpetuating biases or introducing new ones. This requires diverse training data and fairness-aware algorithms. Another issue is balancing moderation and free speech, avoiding over-censorship that could stifle legitimate expression. Future efforts may focus on hybrid systems combining AI with human expertise to ensure both fairness and efficiency. Additionally, technologies like blockchain could make moderation decisions more transparent, giving users clearer insights into why their content was flagged or removed.
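The traceability idea behind blockchain can be illustrated with a simple hash chain, in which each moderation decision records the hash of the previous one, so any later edit to the history becomes detectable. This is a minimal single-process sketch, not a blockchain: it omits distribution and consensus entirely. The `ModerationLog` class and its field names are hypothetical. The `reason` field reflects the goal of telling users why their content was flagged or removed.

```python
import hashlib
import json
from typing import Dict, List


class ModerationLog:
    """Append-only log where each entry commits to the previous entry's hash.

    Editing any past entry changes its hash and breaks the link stored in the
    next entry, so tampering shows up when the chain is re-verified.
    """

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: List[Dict] = []

    def append(self, content_id: str, action: str, reason: str) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        record = {"content_id": content_id, "action": action,
                  "reason": reason, "prev_hash": prev_hash}
        record["hash"] = self._digest(record)
        self.entries.append(record)
        return record["hash"]

    @staticmethod
    def _digest(record: Dict) -> str:
        # Hash every field except the stored hash itself, in a stable key order.
        body = {k: v for k, v in record.items() if k != "hash"}
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def verify(self) -> bool:
        prev_hash = self.GENESIS
        for record in self.entries:
            if record["prev_hash"] != prev_hash or record["hash"] != self._digest(record):
                return False
            prev_hash = record["hash"]
        return True
```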

Addressing biases in AI algorithms is a complex and ongoing process. It requires a commitment to diversity and inclusion at every stage of development, from the selection of training data to the design and testing of the algorithms. This includes actively seeking out and incorporating feedback from diverse user groups, as well as regularly auditing the system to identify and address any biases that may emerge. Additionally, transparency and accountability are crucial for building trust with users. This means providing clear explanations for moderation decisions and allowing users to appeal or challenge those decisions if they believe they were made in error.
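A regular bias audit can start with something as simple as comparing how often the model wrongly flags benign content from different user groups. This is a minimal sketch that assumes human-reviewed records of the form `(group, flagged_by_model, actually_harmful)`. The grouping (for example, language or dialect) and the `max_gap` tolerance of 0.1 are illustrative assumptions, not established standards.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def false_positive_rates(records: Iterable[Tuple[str, bool, bool]]) -> Dict[str, float]:
    """Per-group false positive rate: benign items wrongly flagged / all benign items."""
    wrongly_flagged = defaultdict(int)
    benign = defaultdict(int)
    for group, flagged, harmful in records:
        if not harmful:  # only benign items can be false positives
            benign[group] += 1
            if flagged:
                wrongly_flagged[group] += 1
    return {g: wrongly_flagged[g] / n for g, n in benign.items()}


def audit(records, max_gap: float = 0.1) -> Tuple[Dict[str, float], bool]:
    """Return per-group rates and whether the largest gap stays within `max_gap`."""
    rates = false_positive_rates(records)
    gap = max(rates.values()) - min(rates.values()) if rates else 0.0
    return rates, gap <= max_gap
```

A failing audit does not say why one group is over-flagged; it points reviewers to where they should look, for example at training data for that group or at the appeals it generates.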

The Future of Content Moderation

As technology evolves, the future of content moderation will likely see a blend of AI and human oversight. Advanced AI systems will handle the bulk of real-time analysis and flagging, while human moderators will make nuanced decisions that require contextual understanding. This hybrid approach aims to balance efficiency and fairness, ensuring that moderation practices are both effective and just.
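A common way to split work between AI and human moderators is to route by model confidence: act automatically only when the model is very sure, and send the uncertain middle band to people. This is a minimal sketch under stated assumptions. It takes a single hypothetical `harm_probability` from some classifier, and the thresholds 0.95 and 0.5 are placeholders that a platform would tune.

```python
from enum import Enum


class Route(Enum):
    AUTO_REMOVE = "auto_remove"
    HUMAN_REVIEW = "human_review"
    ALLOW = "allow"


def route(harm_probability: float, remove_above: float = 0.95, review_above: float = 0.5) -> Route:
    """Automate the confident extremes; send the uncertain middle band to humans."""
    if not 0.0 <= harm_probability <= 1.0:
        raise ValueError("harm_probability must be in [0, 1]")
    if harm_probability >= remove_above:
        return Route.AUTO_REMOVE
    if harm_probability >= review_above:
        return Route.HUMAN_REVIEW
    return Route.ALLOW
```

Widening the review band sends more items to humans, which improves fairness on hard cases at the cost of moderator workload. Narrowing it does the reverse.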

The future of content moderation will likely see the development of even more sophisticated AI systems that can handle increasingly complex and nuanced forms of harmful content. This may include the use of advanced machine learning techniques, such as deep learning and reinforcement learning, to improve the system’s ability to understand context and make accurate decisions. Additionally, the integration of AI with other emerging technologies, such as virtual and augmented reality, could open up new possibilities for content moderation in immersive digital environments.

Multi-Agent AI is revolutionizing content moderation, offering enhanced capabilities for real-time analysis and flagging of harmful content. While this technology promotes safer online spaces, ongoing efforts are needed to address its challenges and ensure it is used ethically as its applications broaden.
